The Challenge

Large Language Models (LLMs) are revolutionizing AI applications, but they come with significant costs. Every API call to models like GPT-4 incurs charges, and as usage scales, these costs can become prohibitive.

- High operational costs for AI-powered applications

- Redundant computations for similar or identical queries

- Scaling limitations due to budget constraints

Our Solution

Airbox AI's revolutionary Route Caching technology intelligently caches LLM responses, eliminating redundant API calls and dramatically reducing costs.

- Cut LLM costs by up to 95%

- Seamless integration with existing AI applications

- Scale your AI capabilities without scaling costs

How Route Caching Works

Our intelligent caching system identifies redundant LLM calls and serves the previously generated response from cache, so repeated queries return identical responses without a new API charge.
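To make the general idea concrete, here is a minimal sketch of an exact-match response cache in Python. It is a generic illustration of response caching, not Airbox's Route Caching implementation, whose internals are not described here. The names `ResponseCache`, `call_model`, and `complete` are hypothetical, and the choice to normalize only whitespace before hashing is an assumption made for the example.

```python
import hashlib
import json
from typing import Callable, Dict


class ResponseCache:
    """Exact-match cache keyed on a hash of (model, whitespace-normalized prompt).

    Hypothetical sketch: `call_model` stands in for whatever function
    actually sends a prompt to a paid LLM API and returns its text.
    """

    def __init__(self, call_model: Callable[[str, str], str]) -> None:
        self._call_model = call_model
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # Collapse runs of whitespace so trivially different prompts share a key.
        normalized = " ".join(prompt.split())
        payload = json.dumps({"model": model, "prompt": normalized}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def complete(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            # Cache hit: return the stored response, no API call is made.
            self.hits += 1
            return self._store[key]
        # Cache miss: pay for one API call and remember the result.
        self.misses += 1
        response = self._call_model(model, prompt)
        self._store[key] = response
        return response
```

In use, you would wrap your existing API call, for example `cache = ResponseCache(my_llm_call)`, and route requests through `cache.complete(model, prompt)`. The ratio of `hits` to total requests gives a rough sense of how many paid calls were avoided. A production system would also need eviction, expiry, and a policy for prompts that are similar but not identical, none of which this sketch attempts.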

Meet Our Leadership

The three founders of FDL, who are also the developers of Airbox, have a combined 150 years of technology-related experience across the private and public sectors. Our backgrounds are diverse enough that we can think "outside the box" in ways that would not be possible if we were clones of each other.

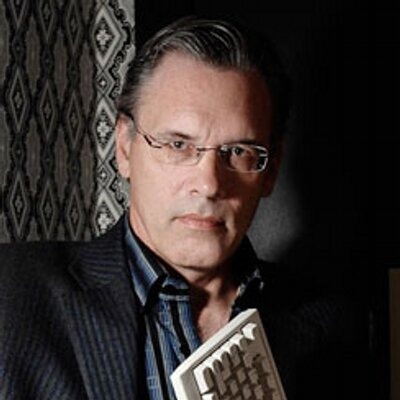

Bob Cringely

Chief Technology Officer

A Silicon Valley veteran with technology product management experience as the 12th employee of Apple and the 29th employee of Adobe Systems. He also has decades of experience as a tech journalist and filmmaker.

Bob Litan

CEO

A lawyer-economist with research, executive, and legal experience as Vice President at the Brookings Institution, the Kauffman Foundation, and Bloomberg Government. Author of over 30 books and 250 articles.

That is certainly the case with Airbox. The same will be true for the AI infrastructure products we look forward to bringing to market in the future.

Get in Touch

Interested in learning how Airbox AI can help your organization cut LLM costs? Schedule a demo or reach out to our team.

Phone

+1 (843) 860-2851

Email

bcringely@airboxai.com